The Datacenter GPU Gravy Train That No One Will Derail

We have five decades of very fine-grained analysis of CPU compute engines in the datacenter, and changes come at a steady but glacial pace when it comes to CPU serving. …

Since the advent of distributed computing, there has been a tension between the tight coherency of memory and its compute within a node – the base level of a unit of compute – and the looser coherency over the network across those nodes. …

The exorbitant cost of GPU-accelerated systems for training and inference and the latest rush to find gold in mountains of corporate data are combining to exert tectonic forces on the datacenter landscape and push up a new Himalaya range – with Nvidia as its steepest and highest peak. …

In a world where allocations of “Hopper” H100 GPUs coming out of Nvidia’s factories are going out well into 2024, and the allocations for the impending “Antares” MI300X and MI300A GPUs are probably long since spoken for, anyone trying to build a GPU cluster to power a large language model for training or inference has to think outside of the box. …

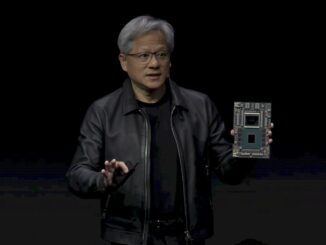

Heaven forbid that we take a few days of downtime. When we were not looking – and forcing ourselves to not look at any IT news because we have other things going on – that is the moment when Nvidia decides to put out a financial presentation that embeds a new product roadmap within it. …

If there is one thing that is absolutely immune from inflationary curbs and that is, to a certain degree, also contributing to inflationary pressures in the global economy, it is generative AI. …

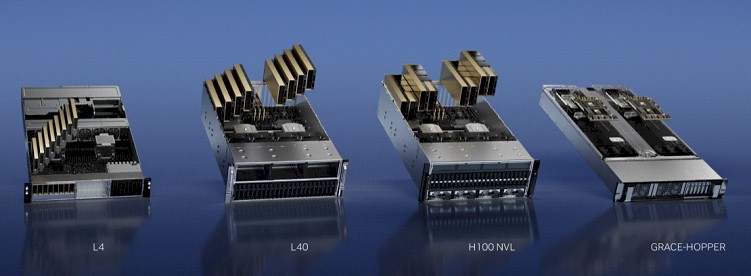

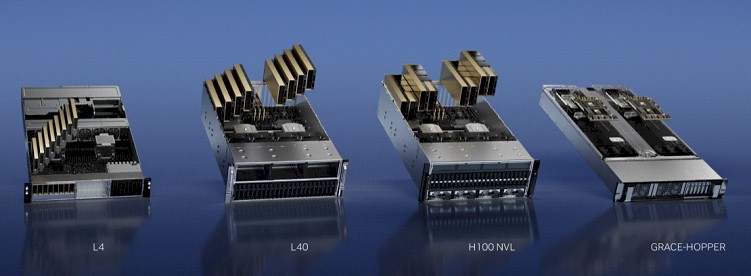

In a world where Nvidia is allocating proportional shares of its GPU hotcakes to all of the OEMs and ODMs, companies like Dell, Hewlett Packard, Lenovo, and Supermicro get their shares and then they turn around and try to sell systems using them at the highest possible price. …

If large language models are the foundation of a new programming model, as Nvidia and many others believe they are, then the hybrid CPU-GPU compute engine is the new general purpose computing platform. …

Updated With More MGX Specs: Whenever a compute engine maker also does motherboards as well as system designs, those companies that make motherboards (there are dozens who do) and create system designs (the original design manufacturers and the original equipment manufacturers) get a little bit nervous as well as a bit relieved. …

Updated: Like many HPC and AI system builders, we are impatient to see what the “Antares” Instinct MI300A hybrid CPU-GPU system on chip from AMD might look like in terms of performance and price. …

All Content Copyright The Next Platform